ItemState management

Note: This documentation has been created based on jackrabbit-core-1.6.1.

The JCR API revolves around Nodes and Properties (which are called Items). Internally, these Items have an ItemState which may or may not be persistent. Modifications to Items through the JCR Session act on the ItemState, and saving the JCR Session persists the touched ItemStates to the persistent store and makes the changes visible to other JCR Sessions. The management of ItemState instances over various concurrent sessions is an important responsibility of the Jackrabbit core. The following picture shows some of the relevant components in the management of ItemStates.

Core components and their responsibilities:

- A Session implementation is the starting point for interaction with a JCR instance. It is obtained by logging in through the Repository instance, which is not shown in the picture above. A Session reflects the client's view on the repository state, and manages transient changes of the client to the repository state which can be persisted by calling Session.save(). A SessionImpl instance has a SessionItemStateManager, a HierarchyManager and an ItemManager.

- The SessionItemStateManager implements the UpdatableItemStateManager type (which extends the ItemStateManager type). Its main responsibilities are to provide access to persistent items, and to manage transient items (i.e., items that have been modified through the session but which have not yet been saved).

- The ItemManager is responsible for providing access to NodeImpl and PropertyImpl instances by path (e.g., the call Session.getNode("/A") will end up in the ItemManager). It uses the SessionItemStateManager to get access to the ItemStates which it needs to create Node and Property instances for the client of the API.

- A HierarchyManager is responsible for translating paths to internal ItemId instances (a UUID for Nodes and a UUID + property name for Properties) and vice versa. It typically is session local as it must take the transient changes into account.

- The LocalItemStateManager isolates changes to persistent states from other clients. It does this by wrapping states obtained from the SharedItemStateManager. It also builds a ChangeLog instance that is passed on to the SharedItemStateManager when the session is saved.

- The SharedItemStateManager manages persistent states and stores states when sessions are saved using a PersistenceManager instance. It also updates the search index, synchronizes with the cluster and triggers the observation mechanism (all on session save).

Miscellaneous concepts:

- The ItemData type and subtypes are a thin wrapper around the ItemState type and subtypes. The ItemManager creates new Item instances every time it is asked for an item (e.g., via session.getNode("/A")). These items, however, point to the same NodeData instance which keeps track of whether the item has been modified (via its status field).

- Shareable nodes are a JCR 2.0 feature (see section 3.9 of the spec). The feature touches at least the ItemState, AbstractNodeData and ItemManager types.

- The NodeStateMerger type is a helper class whose responsibility it is to merge changes from other Sessions to the current Session. It is used in the SessionItemStateManager and the SharedItemStateManager. In the first case it is used in a callback function (called from the SharedItemStateManager) to merge changes in the persistent state to the transient state of the Session. In the second case it is used just before a ChangeLog is persisted to merge differences between the given ChangeLog and the persistent state.

Remarks:

- There are a number of implementations of the PersistenceManager interface. The managers from the bundle package are recommended. The AbstractBundlePersistenceManager also has a cache (the BundleCache) which caches persistent states.

- The locking strategy of the SharedItemStateManager is configurable per workspace through the ISMLocking entry in the repository descriptor.

The next sections show collaboration diagrams for various use cases that read and/or modify content.

Use case 1: simple read

The following is a collaboration diagram of what happens w.r.t. ItemState management in the Jackrabbit core when a Session reads a single existing node just after startup (i.e., Jackrabbit caches are empty).

- The client of the API has a Session already and asks it to get the node at path "/A".

- The Session delegates this to its ItemManager.

- The ItemManager does not have ItemData in its cache (a plain Map) for the requested item. It asks its SessionItemStateManager for the ItemState.

- The SessionItemStateManager does not have the item in its caches and delegates to the LocalItemStateManager.

- The LocalItemStateManager does not have the item in its caches and delegates to the SharedItemStateManager.

- The SharedItemStateManager does not have the item in its caches and delegates to the PersistenceManager which returns the "shared" ItemState after reading it from the persistent store (database).

- The SharedItemStateManager puts the item in its cache and returns it to the calling LocalItemStateManager.

- The LocalItemStateManager creates a new "local" ItemState based on the returned (shared) ItemState. The former refers to the latter as the "overlayed state". This basically is a copy-on-read action.

- This local ItemState is put in the cache of the LocalItemStateManager and returned to the SessionItemStateManager which returns it to the ItemManager.

- The ItemManager creates an ItemData instance and puts it in its cache,

- then it creates a new NodeImpl instance based on the ItemData and returns that to the Session which gives it to the client.

Remarks:

- The ItemManager returns a new Item instance for every read call. Two such instances that refer to the same Item, however, have the same ItemData reference. This ensures that changes to the state of one instance are immediately visible to the second instance.

- The LocalItemStateManager creates a copy of the shared (persisted) ItemState managed by the SharedItemStateManager. The local state has the shared state as overlayed state. Similarly, if an Item is modified in the session, then a new transient state is created and managed by the SessionItemStateManager (see next use case). This transient state has the local state as overlayed state.

- Each ItemState has a container. This is the ItemStateManager that created it. Shared states have the SharedItemStateManager as container, local states have the LocalItemStateManager as container, and transient states have the SessionItemStateManager as container.

- A LocalItemStateManager registers itself as a listener with the SharedItemStateManager on creation. Similarly, a SessionItemStateManager registers itself as a listener with its LocalItemStateManager on creation. This listener structure is used for callbacks to make changes of other sessions visible (see next use cases).
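The read path and the overlay relation described above can be illustrated with a small stand-alone model. This is only a sketch under simplifying assumptions: the class names (ReadPathSketch, State, SharedManager, LocalManager) are hypothetical and are not the Jackrabbit types, item ids are plain strings, and a Map plays the role of the persistent store.

```java
import java.util.HashMap;
import java.util.Map;

/** Simplified model of the read path; all names are illustrative, not Jackrabbit classes. */
final class ReadPathSketch {

    /** A minimal item state: a value plus an optional overlayed (underlying) state. */
    static final class State {
        String value;
        final State overlayed; // null for shared states

        State(String value, State overlayed) {
            this.value = value;
            this.overlayed = overlayed;
        }
    }

    /** Stands in for the SharedItemStateManager in front of a persistent store. */
    static final class SharedManager {
        private final Map<String, String> store;                 // the "database"
        private final Map<String, State> cache = new HashMap<>();
        int storeReads = 0;                                      // counts cache misses

        SharedManager(Map<String, String> store) {
            this.store = store;
        }

        State getState(String id) {
            State cached = cache.get(id);
            if (cached != null) {
                return cached;
            }
            String value = store.get(id);
            if (value == null) {
                throw new IllegalArgumentException("no such item: " + id);
            }
            storeReads++;
            State shared = new State(value, null);
            cache.put(id, shared);
            return shared;
        }
    }

    /** Stands in for the per-session LocalItemStateManager. */
    static final class LocalManager {
        private final SharedManager shared;
        private final Map<String, State> cache = new HashMap<>();

        LocalManager(SharedManager shared) {
            this.shared = shared;
        }

        State getState(String id) {
            // Copy-on-read: wrap the shared state in a session-local copy.
            return cache.computeIfAbsent(id, key -> {
                State s = shared.getState(key);
                return new State(s.value, s);
            });
        }
    }

    public static void main(String[] args) {
        Map<String, String> store = new HashMap<>();
        store.put("/A", "node A");
        SharedManager shared = new SharedManager(store);
        State a1 = new LocalManager(shared).getState("/A");
        State a2 = new LocalManager(shared).getState("/A");
        System.out.println("distinct local copies: " + (a1 != a2));
        System.out.println("same shared state: " + (a1.overlayed == a2.overlayed));
        System.out.println("store reads: " + shared.storeReads);
    }
}
```

In this model two sessions that read the same item get distinct local states that overlay one and the same shared state, and the store is only read on the first access.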

Use case 2: simple write

Consider the situation in which we have just executed use case 1. I.e., the client has a reference to a NodeImpl. The following shows what happens when the client adds a single property to that node.

- The client invokes setProperty("prop A", "value") on the NodeImpl.

- The NodeImpl delegates the creation of a transient property state to the SessionItemStateManager.

- The SessionItemStateManager creates a new PropertyState,

- puts it in the transient store cache and returns it to the NodeImpl.

- The NodeImpl now must create a property item instance and calls the ItemManager for this with the new PropertyState.

- The ItemManager creates new property data based on the given state, puts it in its local cache, and

- creates a new PropertyImpl instance which it returns to the NodeImpl.

- The NodeImpl must still modify the NodeState to which the property has been added and delegates the creation of a transient state to the SessionItemStateManager.

- The SessionItemStateManager creates a new NodeState,

- puts it in its transient store and returns it to the NodeImpl which sets the name of the new property in its now transient state and returns the created PropertyImpl instance.

Remarks:

- The ItemManager has a cache of ItemData instances.

- Modification of a node creates a transient copy of the local ItemState.
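The copy-on-write behaviour of the transient space can be sketched as follows. This is a minimal model, not the Jackrabbit implementation: TransientWriteSketch, NodeState, TransientStore and addProperty are hypothetical names, and a node state is reduced to an id and a list of property names.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Simplified model of transient states; all names are illustrative. */
final class TransientWriteSketch {

    /** A node state reduced to its id and the names of its properties. */
    static final class NodeState {
        final String id;
        final List<String> propertyNames;
        final NodeState overlayed; // the state this one was copied from, or null

        NodeState(String id, List<String> propertyNames, NodeState overlayed) {
            this.id = id;
            this.propertyNames = new ArrayList<>(propertyNames); // defensive copy
            this.overlayed = overlayed;
        }
    }

    /** Stands in for the transient store of the SessionItemStateManager. */
    static final class TransientStore {
        private final Map<String, NodeState> transientStates = new HashMap<>();

        /** Returns the transient copy of a local state, creating it on first modification. */
        NodeState makeTransient(NodeState local) {
            return transientStates.computeIfAbsent(local.id,
                    key -> new NodeState(local.id, local.propertyNames, local));
        }

        boolean hasTransient(String id) {
            return transientStates.containsKey(id);
        }
    }

    /** Mimics the node-state part of setProperty: the name is recorded in the transient copy only. */
    static NodeState addProperty(TransientStore store, NodeState local, String name) {
        NodeState transientState = store.makeTransient(local);
        transientState.propertyNames.add(name);
        return transientState;
    }

    public static void main(String[] args) {
        TransientStore store = new TransientStore();
        NodeState local = new NodeState("/A", new ArrayList<>(), null);
        NodeState transientState = addProperty(store, local, "prop A");
        System.out.println("transient: " + transientState.propertyNames);
        System.out.println("local:     " + local.propertyNames);
    }
}
```

The local state stays untouched until the session is saved; only the transient copy, which overlays the local state, carries the modification.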

Now suppose that another Session also adds a property to the same node. The following picture shows the relations of the various item state managers and the existing item states in the caches of the unsaved sessions.

The SharedItemStateManager has the shared state of the persistent node. It has two listeners: the LocalItemStateManagers of the existing Sessions. Each LocalItemStateManager has a local copy of the shared state (the local state, which has the shared state as overlayed state) and has a SessionItemStateManager as a listener. Both SessionItemStateManagers have a transient copy of their local state (the overlayed state) which contains the modifications (addition of a property), and also a transient state for the new property.

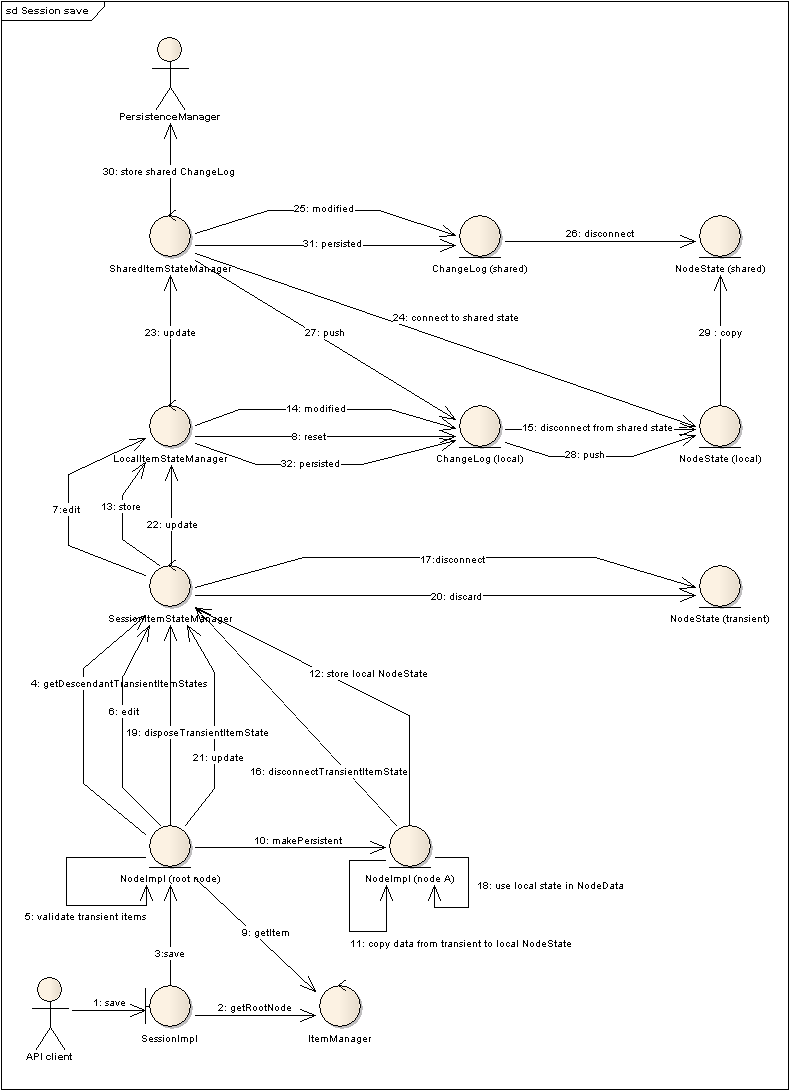

Use case 3: save

Consider the situation in which we have just executed use case 2. I.e., the client has added a single property to an existing node. The transient space contains two items: the new PropertyState and the modified NodeState of the Node to which the new property has been added. The following shows what happens when the session is saved. Note that only the modified NodeState instance is taken into account because otherwise the picture becomes even more unreadable. The handling of the new PropertyState however is approximately the same.

- The client calls Session.save().

- The SessionImpl retrieves the root node from the ItemManager.

- The SessionImpl calls the save method on the ItemImpl which represents the root node.

- The root NodeImpl retrieves the transient ItemStates that are descendants of itself (in this case the states of the modified node and the new property).

- The root NodeImpl does some validations on the transient space (checks that nodetype constraints are satisfied, that the set of dirty items is self-contained, etc.).

- The root NodeImpl starts an edit operation by calling edit on the SessionItemStateManager.

- The SessionItemStateManager delegates this to the LocalItemStateManager,

- which resets its ChangeLog instance.

- The root NodeImpl retrieves the first transient item from the ItemManager (assume that this is the modified node),

- and calls makePersistent on it.

- The NodeImpl instance of node "A" copies data from the transient NodeState to the local NodeState (i.e., to the overlayed state of the transient state).

- The NodeImpl instance calls store on the SessionItemStateManager with the local NodeState as argument.

- The SessionItemStateManager delegates this to the LocalItemStateManager which

- calls modified on its ChangeLog instance with the local state as argument. The ChangeLog records the local state as modified and

- disconnects the local state from its overlayed state (the shared state which is contained by the SharedItemStateManager).

- The NodeImpl instance of node "A" now asks the SessionItemStateManager to disconnect the transient state and it

- delegates this call to the state itself. Now the transient state of node "A" has no overlayed state.

- The NodeImpl instance of node "A" now uses the local state for its NodeData.

- The NodeImpl instance of the root node now disposes the transient item state of node "A" and

- the SessionItemStateManager calls discard on the transient NodeState of node "A" and removes it from its cache.

- The NodeImpl instance of the root node now calls update on the SessionItemStateManager which

- delegates this to the LocalItemStateManager, which in turn

- delegates this to the SharedItemStateManager with the local ChangeLog as argument.

- The SharedItemStateManager reconnects the local state to the shared state managed by itself, merges changes from the local state and the shared state, and

- adds the shared state to the shared ChangeLog.

- The shared ChangeLog disconnects the shared state from its overlayed state (which does nothing because the shared state has no overlayed state).

- The SharedItemStateManager now pushes the changes from the local ChangeLog to the shared states.

- The local ChangeLog calls push on the local NodeState contained in its set of modified states.

- The local NodeState copies its own data to the overlayed shared state (which is contained in the shared ChangeLog).

- The SharedItemStateManager stores the ChangeLog to the persistent store and

- calls persisted on its shared ChangeLog. (Important note: this invokes callbacks that make the changes visible to other sessions. This is modeled in the next view.)

- After control is returned to the LocalItemStateManager it also calls persisted on its local ChangeLog to invoke ItemStateListener callbacks.

Remarks:

- There are two ChangeLogs in use. One is the local ChangeLog which is assembled by the LocalItemStateManager. The shared ChangeLog is constructed from the local ChangeLog by the SharedItemStateManager. These are both essentially the same; the local ChangeLog is based on local states whereas the shared ChangeLog is based on shared states.

- During the save of a session information flows from the transient state to the shared state. The ItemImpl (and subtypes) copy transient item state information to the local state. The SharedItemStateManager calls push on the local ChangeLog to push the local changes to the shared states (and thus to the shared ChangeLog).

- During a save of a session information also flows back from the shared state to the transient and local states in other sessions. This is modeled in the next view.
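The interplay of the two ChangeLogs during a save (disconnect, reconnect, push, store) can be sketched in a few lines. The model below is an illustration under simplifying assumptions and not the Jackrabbit code: ChangeLogSketch and its nested classes are hypothetical, merging and locking are left out, and a Map stands in for the PersistenceManager.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Simplified model of the save flow; all names are illustrative. */
final class ChangeLogSketch {

    static final class State {
        final String id;
        String value;
        State overlayed;

        State(String id, String value) {
            this.id = id;
            this.value = value;
        }

        void connect(State underlying) { overlayed = underlying; }

        void disconnect() { overlayed = null; }

        /** Copies this state's data to its overlayed state. */
        void push() {
            if (overlayed == null) {
                throw new IllegalStateException("not connected: " + id);
            }
            overlayed.value = value;
        }
    }

    static final class ChangeLog {
        private final List<State> modified = new ArrayList<>();

        /** Records a state as modified and disconnects it from its overlayed state. */
        void modified(State state) {
            modified.add(state);
            state.disconnect();
        }

        void push() {
            for (State state : modified) {
                state.push();
            }
        }

        List<State> modifiedStates() { return modified; }
    }

    /** Stands in for the SharedItemStateManager; the store map replaces the PersistenceManager. */
    static final class SharedManager {
        final Map<String, State> sharedStates = new HashMap<>();
        final Map<String, String> store = new HashMap<>();

        void update(ChangeLog local) {
            ChangeLog shared = new ChangeLog();
            for (State localState : local.modifiedStates()) {
                State sharedState = sharedStates.get(localState.id);
                if (sharedState == null) {
                    throw new IllegalStateException("unknown item: " + localState.id);
                }
                localState.connect(sharedState); // reconnect local to shared
                shared.modified(sharedState);    // disconnect is a no-op for shared states
            }
            local.push();                        // local data flows into the shared states
            for (State sharedState : shared.modifiedStates()) {
                store.put(sharedState.id, sharedState.value); // "persist" the shared ChangeLog
            }
        }
    }

    public static void main(String[] args) {
        SharedManager manager = new SharedManager();
        State shared = new State("/A", "v1");
        manager.sharedStates.put("/A", shared);

        State local = new State("/A", "v1");
        local.connect(shared);
        local.value = "v2";          // result of copying the transient data to the local state

        ChangeLog log = new ChangeLog();
        log.modified(local);         // records the state and disconnects it
        manager.update(log);
        System.out.println(shared.value + " / " + manager.store.get("/A"));
    }
}
```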

Consider the situation in which we have just executed use case 2. I.e., the client has added a single property to an existing node. The transient space contains two items: the new PropertyState and the modified NodeState of the Node to which the new property has been added. Now suppose that there is another Session which also has added a property to that existing node. The following shows what happens when the session is saved (focus on callbacks from the SharedItemStateManager to the transient states in the second session).

- The API client saves the session.

- The update operation on the LocalItemStateManager is called (the local ChangeLog has already been constructed and the local states in that ChangeLog have been disconnected from their shared counterparts).

- The SharedItemStateManager is called to process the update in the given local ChangeLog.

- The states in the local ChangeLog are connected to their shared counterparts and merged (step 4') if they are stale. This is necessary because it could have happened that between step 22 and 23 another session on another thread was saved which changed states in the local ChangeLog (which are disconnected from their shared states). The detection of whether a state is stale is done via a modification counter. If the merge fails, a StaleItemStateException is thrown and the save fails.

- When the merge succeeds the shared state is added to the shared ChangeLog which

- disconnects the shared state (which effectively does nothing as the shared state has no overlayed state).

- The changes in the local ChangeLog are pushed to the shared ChangeLog by

- invoking push on each local state in the local ChangeLog which

- copies the local state to the shared state.

- Then the shared ChangeLog is given to the PersistenceManager to persist.

- Now the changes are pushed down to other sessions. This is started by invocation of the persisted method on the shared ChangeLog.

- The shared ChangeLog calls notifyStateUpdated on each of its modified states.

- The modified shared state calls stateModified with itself as argument on its container, the SharedItemStateManager.

- The SharedItemStateManager calls stateModified with the shared state as argument on each of its listeners. These are at least the LocalItemStateManagers of every session in the workspace.

- The SharedItemStateManager calls stateModified with the shared state as argument on a LocalItemStateManager of the other session.

- The LocalItemStateManager pulls in the changes by invoking pull on the local state (if it exists!).

- The state copies the information from its shared counterpart.

- The LocalItemStateManager now calls its listeners with the local state as argument.

- The SessionItemStateManager sees that a local state has been changed which has a transient state. It invokes the NodeStateMerger to try to merge the changes to the transient state.

- The NodeStateMerger tries to merge the local state with the transient state by e.g., merging the child node entries.

- ...

Remarks:

- The NodeStateMerger is used by the SessionItemStateManager to merge changes from other sessions to transient states. It is also used by the SharedItemStateManager to merge changes of other sessions to a local ChangeLog. The latter is necessary because the states in a local ChangeLog are disconnected from their shared counterparts, which prevents persisted changes from being pulled in.
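The callback chain from the shared state down to a transient state in another session can be modelled as a two-level listener structure. The following is a sketch only: CallbackSketch and its nested types are hypothetical, each manager tracks a single item, and the merge is a naive union of property names rather than the logic of the NodeStateMerger.

```java
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

/** Simplified model of the listener callbacks; all names are illustrative. */
final class CallbackSketch {

    interface Listener {
        void stateModified(State modified);
    }

    static final class State {
        final Set<String> names = new LinkedHashSet<>();
        State overlayed;

        /** Copies the data of the overlayed state into this state. */
        void pull() {
            names.clear();
            names.addAll(overlayed.names);
        }
    }

    /** Stands in for the SharedItemStateManager (single item only). */
    static final class SharedManager {
        final State shared = new State();
        final List<Listener> listeners = new ArrayList<>();

        /** Called after the shared ChangeLog has been persisted. */
        void persisted() {
            for (Listener listener : listeners) {
                listener.stateModified(shared);
            }
        }
    }

    /** Stands in for a LocalItemStateManager of another session. */
    static final class LocalManager implements Listener {
        State local; // null if this session never read the item
        final List<Listener> listeners = new ArrayList<>();

        @Override
        public void stateModified(State shared) {
            if (local == null || local.overlayed != shared) {
                return; // nothing cached for this item
            }
            local.pull();
            for (Listener listener : listeners) {
                listener.stateModified(local);
            }
        }
    }

    /** Stands in for a SessionItemStateManager of another session. */
    static final class SessionManager implements Listener {
        State transientState; // null if the item is unmodified in this session

        @Override
        public void stateModified(State local) {
            if (transientState != null && transientState.overlayed == local) {
                transientState.names.addAll(local.names); // naive merge: union of names
            }
        }
    }

    public static void main(String[] args) {
        SharedManager sharedManager = new SharedManager();
        LocalManager localManager = new LocalManager();
        SessionManager sessionManager = new SessionManager();
        sharedManager.listeners.add(localManager);
        localManager.listeners.add(sessionManager);

        localManager.local = new State();
        localManager.local.overlayed = sharedManager.shared;
        sessionManager.transientState = new State();
        sessionManager.transientState.overlayed = localManager.local;
        sessionManager.transientState.names.add("prop B"); // unsaved change in this session

        sharedManager.shared.names.add("prop A");          // change saved by another session
        sharedManager.persisted();
        System.out.println(sessionManager.transientState.names);
    }
}
```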

Staleness detection, merging and synchronization considerations

Since different Sessions can be used concurrently (i.e., from different threads) it is possible that two Sessions modify the same node and save at the same moment. There is essentially a single lock that serializes save calls: the ISMLocking instance in the SharedItemStateManager. This is a Jackrabbit-specific read-write lock which supports write locks for ChangeLog instances and read locks for individual items. The read lock is acquired when the SharedItemStateManager needs to read from its ItemStateReferenceCache (typically when Sessions access items: the LocalItemStateManagers create a local copy of the shared state). The write lock is acquired in two places:

- When an API client saves its Session: the write lock is acquired in the update method which is called on the SharedItemStateManager by the LocalItemStateManager of the Session that is saved. It is downgraded to a read lock after the changes have been persisted, just before the other sessions are notified. The read lock is released after that.

- When an external update from another cluster node needs to be processed (see next section). The write lock is acquired and released in the externalUpdate method of the SharedItemStateManager. In between, a ChangeLog from another cluster node is processed (i.e., applied to the shared states and pushed to the local states).
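The downgrade from a write lock to a read lock described in the first case can be illustrated with the standard java.util.concurrent classes. Note that this is a stand-in: the sketch uses ReentrantReadWriteLock instead of the ISMLocking type, and LockDowngradeSketch and its update method are hypothetical names.

```java
import java.util.concurrent.locks.ReentrantReadWriteLock;

/** Write-then-downgrade pattern, using ReentrantReadWriteLock as a stand-in for ISMLocking. */
final class LockDowngradeSketch {

    final ReentrantReadWriteLock lock = new ReentrantReadWriteLock();

    /**
     * Runs persist under the write lock, then downgrades to a read lock
     * and runs notifyListeners before releasing the read lock.
     */
    void update(Runnable persist, Runnable notifyListeners) {
        lock.writeLock().lock();
        boolean holdsWriteLock = true;
        try {
            persist.run();
            // Downgrade: acquire the read lock before giving up the write lock,
            // so that no other writer can slip in between.
            lock.readLock().lock();
            lock.writeLock().unlock();
            holdsWriteLock = false;
            try {
                notifyListeners.run();
            } finally {
                lock.readLock().unlock();
            }
        } finally {
            if (holdsWriteLock) {
                lock.writeLock().unlock(); // only reached if persisting or downgrading failed
            }
        }
    }

    public static void main(String[] args) {
        LockDowngradeSketch sketch = new LockDowngradeSketch();
        sketch.update(
                () -> System.out.println("persisting, write locked: "
                        + sketch.lock.isWriteLockedByCurrentThread()),
                () -> System.out.println("notifying, write locked: "
                        + sketch.lock.isWriteLocked()
                        + ", readers: " + sketch.lock.getReadLockCount()));
    }
}
```

Holding only a read lock during notification lets readers in other sessions proceed while the callbacks run, whereas other writers remain blocked until the read lock is released.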

Thus, two threads can concurrently build their local ChangeLogs, but they need exclusive access to the SharedItemStateManager for processing their ChangeLog. During this processing a saving thread invokes callbacks on the ItemStateListener interface (LocalItemStateManager and SessionItemStateManager implement that type) in other open sessions (via the persisted call on the shared ChangeLog in step 11). The intention of this listener structure is that open sessions get immediate access to the saved changes. Of course, there can be collisions which must be detected. Therefore, every ItemState instance has the following fields:

- an isTransient flag which indicates whether the state has been created by the SessionItemStateManager

- a modCount counter which essentially is a revision number of the underlying shared state (it is updated through the touch method called from the SharedItemStateManager)

- a status field which indicates the status of the state (one of UNDEFINED, EXISTING, EXISTING_MODIFIED, EXISTING_REMOVED, NEW, STALE_MODIFIED, STALE_DESTROYED)

The isStale method on the ItemState is used for determining whether an ItemState can be saved to the persistent store. The method of staleness detection depends on whether the state is transient. If it is, then staleness is determined by checking the status field. If it is not transient, then staleness is determined by comparing its modCount with the modCount of the overlayed state. The isStale method is used in three places:

- The makePersistent methods on the NodeImpl and PropertyImpl use it to throw an InvalidItemStateException if the transient state of the item has become stale.

- The stateModified callback method on the SessionItemStateManager uses it to determine whether a merge is attempted. If a merge failed, then the status is set to a stale value. In that way, merges are only tried if they have not yet failed for the state. (The ItemImpl.save method uses stale statuses to fail fast.)

- The SharedItemStateManager uses the isStale method to try a merge just before saving if a local state has become stale. This can occur because a local ChangeLog has been disconnected from the shared states. If another session then saves, it is possible that states in the local ChangeLog become stale (detected by the modCount).
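The two staleness rules can be written down compactly. The sketch below follows the description above and is not copied from the Jackrabbit source: StalenessSketch, its State class and the Status enum are hypothetical names that mirror the fields listed earlier.

```java
/** Simplified model of staleness detection; all names are illustrative. */
final class StalenessSketch {

    enum Status {
        UNDEFINED, EXISTING, EXISTING_MODIFIED, EXISTING_REMOVED,
        NEW, STALE_MODIFIED, STALE_DESTROYED
    }

    static final class State {
        final boolean isTransient; // created by the session-level manager?
        Status status;
        int modCount;              // revision number of the underlying shared state
        State overlayed;

        State(boolean isTransient, Status status) {
            this.isTransient = isTransient;
            this.status = status;
        }

        /** Increments the revision; in this model it is called when a shared state is saved. */
        void touch() {
            modCount++;
        }

        boolean isStale() {
            if (isTransient) {
                // Transient states: staleness is recorded in the status field.
                return status == Status.STALE_MODIFIED || status == Status.STALE_DESTROYED;
            }
            // Non-transient states: compare revision numbers with the overlayed state.
            return overlayed != null && modCount != overlayed.modCount;
        }
    }

    public static void main(String[] args) {
        State shared = new State(false, Status.EXISTING);
        State local = new State(false, Status.EXISTING);
        local.overlayed = shared;
        local.modCount = shared.modCount;
        System.out.println("before other save: " + local.isStale());
        shared.touch(); // another session saved the item
        System.out.println("after other save:  " + local.isStale());
    }
}
```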

Concurrency issues:

- Lack of synchronization between client-triggered operations such as (re)moving a node and callbacks from other sessions that may affect the transient states and disrupt the client-triggered operation (by interleaving).

Clustering (external updates)

In clustered environments potentially every cluster node may write to the JCR. Because the JCR spec requires a certain level of consistency the synchronized access to the local SharedItemStateManager is not enough to make things work. The global idea of the clustering mechanism is the following:

- When the SharedItemStateManager saves a ChangeLog to the persistent store, it also writes a cluster revision (a representation of the just persisted ChangeLog) to some place where other cluster nodes can read it. Such a revision has a number, and every cluster node has a local revision number which indicates which revision entries they have processed.

- There is a global revision counter which acts as a cluster-wide lock. Before a SharedItemStateManager can save a ChangeLog it must acquire this repository-wide lock. This happens at the beginning of the Update operation with the call eventChannel.updateCreated(this). The locked global revision then becomes the number of the revision that is about to be written. Other cluster nodes now cannot save because they are blocked on the global revision lock.

- Just after the SharedItemStateManager (or actually the ClusterNode type) acquires the global revision lock it also reads revisions of other cluster nodes to get completely up-to-date. This might prevent the current ChangeLog from being saved because other cluster nodes may have saved incompatible changes.

- At the end of the Update operation the global lock is released and other cluster nodes can save.

- In order to keep up to date with the latest revision, the ClusterNode type periodically reads revisions and pushes these via the SharedItemStateManager to any active Sessions.
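The revision mechanism can be sketched with an in-memory journal. This is a rough model under stated assumptions and not the Jackrabbit clustering code: ClusterRevisionSketch, Journal and the ClusterNode class below are hypothetical, a ChangeLog is reduced to a string, a ReentrantLock in one JVM replaces the cluster-wide lock, and conflict detection is left out.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.locks.ReentrantLock;

/** Simplified model of cluster revisions; all names are illustrative. */
final class ClusterRevisionSketch {

    /** Shared place where revisions are written; record i has revision number i + 1. */
    static final class Journal {
        private final ReentrantLock globalLock = new ReentrantLock();
        private final List<String> records = new ArrayList<>();

        void lock() { globalLock.lock(); }

        void unlock() { globalLock.unlock(); }

        synchronized List<String> readSince(long revision) {
            return new ArrayList<>(records.subList((int) revision, records.size()));
        }

        /** Appends a record and returns its revision number. */
        synchronized long append(String changeLog) {
            records.add(changeLog);
            return records.size();
        }
    }

    static final class ClusterNode {
        final Journal journal;
        long localRevision = 0;                                // last revision processed
        final List<String> appliedExternal = new ArrayList<>(); // changes of other nodes

        ClusterNode(Journal journal) {
            this.journal = journal;
        }

        /** Processes all revisions written since localRevision (also usable periodically). */
        void sync() {
            List<String> missed = journal.readSince(localRevision);
            appliedExternal.addAll(missed);
            localRevision += missed.size();
        }

        /** Saves a ChangeLog: lock globally, catch up, write the new revision, unlock. */
        void save(String changeLog) {
            journal.lock();
            try {
                sync();
                localRevision = journal.append(changeLog);
            } finally {
                journal.unlock();
            }
        }
    }

    public static void main(String[] args) {
        Journal journal = new Journal();
        ClusterNode node1 = new ClusterNode(journal);
        ClusterNode node2 = new ClusterNode(journal);
        node1.save("changes of node 1");
        node2.save("changes of node 2"); // first catches up with node 1
        node1.sync();                    // periodic sync picks up node 2's revision
        System.out.println(node1.appliedExternal + " " + node2.appliedExternal);
    }
}
```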